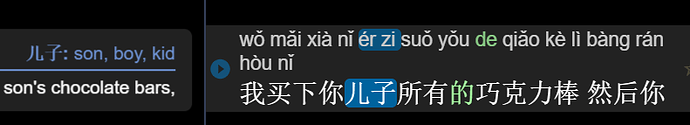

@David_Wilkinson Oh and in addition to those contextual pinyin differences for the same characters used in different words or parts of speech in standard Mandarin Chinese, there’s also the complication that Taiwanese Mandarin differs in pronounciation and vocabulary from that about as much as British English does from American English.

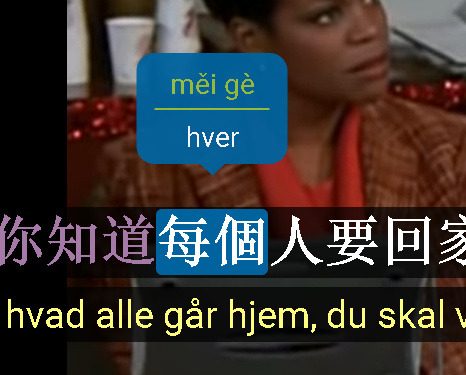

This means that the correct pinyin for Taiwanese Mandarin speech will be different to what’s correct for content from Chinese television, because the same characters should have different tones to reflect how they’re said by Mandarin speakers in Taiwan. Often, the correct pinyin for Taiwanese Mandarin is wrong for standard Mandarin Chinese and vice versa.

Additionally, Taiwanese Mandarin doesn’t tend to use the neutral tone for the second character in double character words. It also has a lot of distinctly different vocabulary, so the translations would be different for the same words, and it has more/different loan words.

So when I’ve complained in the past that I can’t find ‘Simplified Chinese’ content on YouTube through LLY and everything is ‘Tranditional Chinese’, I’m actually complaining that I can only find Taiwanese Mandarin content which is using the wrong tones for my target language a lot of the time, and a different accent and dialect - which is all very much something that I plan to learn familiarity with later on, but is actively unhelpful with immersion learning right now.

Right now I want to be thoroughly immersed in Chinese TV dramas, documentaries, social media videos and films that are all using the tones from standard Mandarian Chinese (which frankly has enough accents and dialects already).

Hopefully, in a year or so from now, I’ll have the tones and vocabulary from standard Mandarin thoroughly ingrained, and then I’ll be ready to start learning how Taiwanese Mandarin differs in vocabulary, tones and pronounciation.

Equally, given that there’s a lot of Taiwanese Mandarin content on YouTube, you probably want to be offering the correct pinyin and definitions when your LLY users are choosing to watch Taiwanese content (like the Taiwanese dubs of Steven Universe and classic Disney movies).

Here’s a relevant article on that https://www.theworldofchinese.com/2014/05/taiwanese-mandarin-starter-kit/

Amazing job David!

Amazing job David!